Quite a simple subject today, but like all this stuff, it gets more interesting when you zoom in a bit.

How do we see? Well, as everyone knows, eyes are like cameras. There is a lens at the front that focuses an upside-down image on your retina, just like the film or CCD at the back of a camera. Obvious, right? God or evolution designed it, humans copied it. Problem solved. That’s how we see.

But all your eyes actually do is put into your head a flat image of the light reflected from objects in the real world. Now that’s a pretty clever trick, and it is complicated enough to have been cited as proof of intelligent design and therefore of the existence of God. That was before evolutionary biologists showed how the eye actually evolved from light-sensitive cells, not once but numerous times, producing different types of eyes. Not only that, but eyes like ours have evolved at least twice with convergent results. Cephalopods (that’s squids and octopuses to you and me) have eyes that are geometrically almost identical to mammalian eyes, but different enough structurally to show that we don’t share a common eye-enabled ancestor.

In fact as scientists look at the evolution of the eye, they discover that the earliest type of light sensitive perception evolved before the first brain. Think about it; a brain wouldn’t have had much to do unless it had some means of understanding the environment. So it makes sense that it needed eyes, or ears or some input device, and keyboards definitely weren’t around at that time. From then on, like the partnership between dogs and humans, it seems that eyes and brains evolved together, each influencing and encouraging the development of the other.

What happens to that little upside-down picture that has been cast by your lens onto the retina anyway? Presumably, because the image on your retina is upside down, your optic nerve includes a 180-degree twist? But where does it go after that? Does it lead to a little screen inside your head where mini-you is looking at it to tell the brain what’s going on? Well, surprisingly, it doesn’t work like that at all. After splitting up so that both eyes are messaging both halves of your brain, the other end of the optic nerve fans out, leading to all sorts of places.

So let’s look at an infant. All those colours and shadows in three dimensions are carefully focussed into a little picture, which is then sent out to lots of different places in our little friend’s head to be made sense of. By the time we are old enough to read and write stuff like this, the process of understanding the world we live in is so familiar to us that it seems it must always have felt like this. But actually it takes us humans months to learn how to interpret that information and to understand that it represents the world.

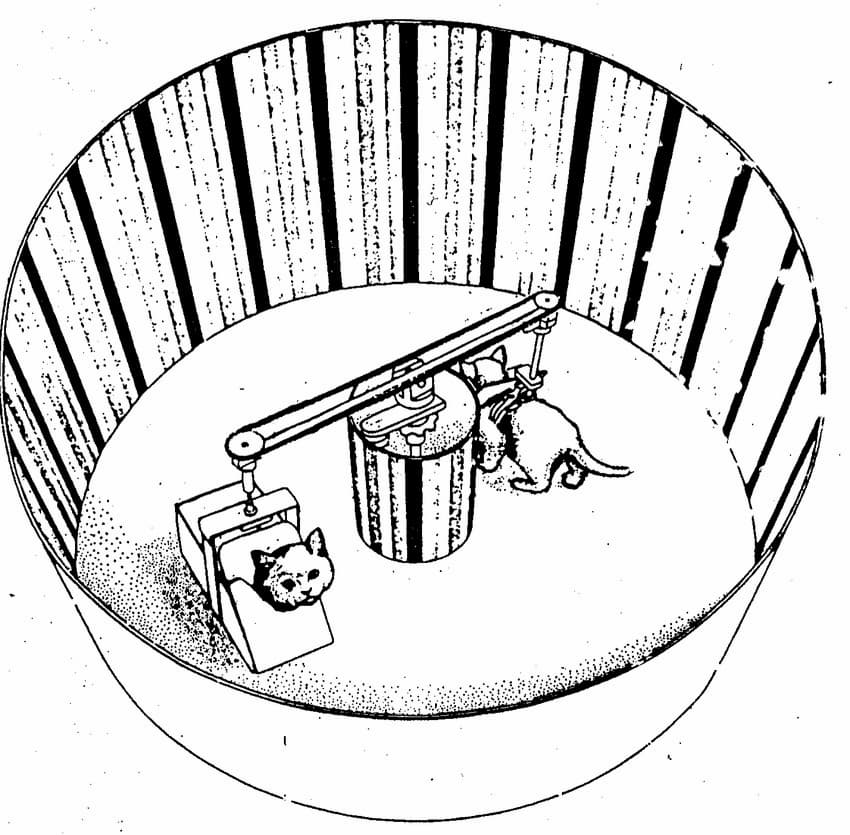

There is a great little experiment that was done by Held and Hein in the early 1960s. I guess it couldn’t be done now, but I don’t think I’m being cruelly exploitative of the participating kittens by referring to it here 60 years later.

Two infant fluffballs opened their little eyes for the first time to discover that they were suspended in baskets from a rod. This horizontal rod was pivoted in the middle, and there were systems of pulleys and chains arranged so that both kittens saw exactly the same things in front of their eyes. Imaginatively, in the age of op art, they were given black and white lines to look at. I would have chosen other stuff like balls of string and mice, but whatever.

One kitten’s legs poked out through the bottom of its cradle, so it was able to move about. It could walk clockwise or anticlockwise around the central pivot. It could also turn on its own axis, move towards and away from the centre point, and crouch down and stretch up, all while seeing its surroundings. The other kitten’s basket was driven by gears and stuff, so it was subject to an identical set of visual stimuli and moved about in exactly the same way, but this second kitten’s legs were kept within its cradle, so it had no way of relating what it saw to what it did. After six weeks, our scientists were able to establish that the second kitten remained effectively blind while the first had been learning to see and explore.

This isn’t really so surprising. Thirteen years earlier, Theodor Erismann, working at the University of Innsbruck in Austria, had put inverting goggles on his assistant Ivo Kohler to turn everything he saw upside down. At first Kohler stumbled about comically, but in less than two weeks he was able to ride a bicycle. Not only that, but you can watch the original film of the experiment on YouTube today. By then, Kohler was perceiving the world as it is. Notice that I have swapped the word ‘see’ for ‘perceive’. Was he seeing it as it was? Well, that depends on definitions, I suppose.

So although it feels natural to us that the world is as we see it, what we actually have is a way of using the fact that light conveniently travels in straight lines to give us enough information to build a data matrix. That is enough for us to decode and use in our interactions with our environment. We end up with the feeling that we know what the world looks like, but we don’t really. Colour, for example, is an interpretation we make of the way light of different wavelengths is reflected by different substances. Colour doesn’t exist ‘out there’; it exists only through the interaction between the world and our perception.

What about the physical geometry of the world, if not its colour? Well, like the kittens, that is something that we can test in other ways. We compare our real world experience of other senses and our motion with what we see, so we can be pretty sure that if we see a brick wall we shouldn’t drive into it whatever colour it may or may not be.

Let’s look at the geometry of the world at a more detailed level. If we see images of textures that look like kitten fur we know that the feeling will be soft; whereas things that glow may be too hot to touch. The point being that we know this not because we are given a hard wired index of ‘fur’ = ‘soft’ and ‘glowing’ = ‘hot’ at birth, but because we learn these things as we go along, just as the kittens learned (or didn’t) that moving their cute little paws in a certain way carried them towards those delightful black lines.

So what about bats; how does that work? Well we learn to perceive the real world by interpreting the light that comes to us having been reflected from its surfaces. Maybe sunlight, maybe artificial light. But the point is that the light that bounces off what we are looking at (or maybe is refracted through it, same difference for the point I’m making) comes from something outside of us. That doesn’t work in caves or on dark nights.

What is good about light as a way of picking up information is that it travels in straight lines. This means that we learn to do what you might call empirical triangulation. So we can work out where stuff is. We can’t do that with sound because as we know, sound wraps its way around obstacles and we can hear birdsong through the window. But actually that’s not really because light is light and sound is sound, it’s more to do with wavelength.
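To make that triangulation a little more concrete, here is the geometry written out as a short Python sketch. Two viewpoints sit at the ends of a baseline, and the angle each line of sight makes with that baseline is enough to pin down how far away the target is. The 6.5 cm eye separation and the target position are assumptions chosen purely for illustration, and nobody is suggesting that brains actually compute sines; this is just the geometry they learn to exploit.

```python
import math

def triangulate(baseline_m: float, left_angle_rad: float, right_angle_rad: float) -> float:
    """Perpendicular distance from the baseline to a target seen from both ends.

    Each angle is measured between the baseline and that end's line of sight.
    """
    return (baseline_m * math.sin(left_angle_rad) * math.sin(right_angle_rad)
            / math.sin(left_angle_rad + right_angle_rad))

BASELINE = 0.065  # assumed eye separation of roughly 6.5 cm
left_eye_x, right_eye_x = -BASELINE / 2, BASELINE / 2

# Hypothetical target: 2 cm right of centre, 1 m straight ahead.
target_x, target_y = 0.02, 1.0

# Angle between the baseline and each eye's line of sight to the target.
left_angle = math.atan2(target_y, target_x - left_eye_x)
right_angle = math.atan2(target_y, right_eye_x - target_x)

distance = triangulate(BASELINE, left_angle, right_angle)
print(f"target is {distance:.3f} m away")
```

The point of the example is how little is needed: two directions and a known separation. Everything else is learned calibration, which is exactly what the kittens were (or weren’t) doing.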

Visible light travels in waves about 500 nanometres long; that’s half of a thousandth of a millimetre, or a hundredth of the thickness of a single strand of kitten fur. So we can use light to recognise fur, because those waves don’t wash around things that are so much bigger than they are. On the other hand, a sound wave at, say, middle C has a wavelength of about 1.3 metres, and the A at the bottom of the piano about 12.5 metres, so those audible sounds are easily able to wrap around obstacles and get to our ears. The lower the pitch, the more easily sound finds its way around and through stuff, which is why you can hear the bass so clearly from that hot hatch going down the high street.
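Those figures come from one simple relationship: wavelength is speed divided by frequency. A minimal sketch to check them, assuming sound travels at about 343 m/s (dry air at roughly 20 °C) and a piano tuned to standard equal temperament with A4 at 440 Hz:

```python
SPEED_OF_SOUND = 343.0          # m/s, dry air at about 20 °C (assumed)
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in a vacuum

def wavelength(speed_m_s: float, frequency_hz: float) -> float:
    """Wavelength in metres: speed divided by frequency."""
    return speed_m_s / frequency_hz

def piano_key_frequency(semitones_from_a4: int) -> float:
    """Equal-temperament pitch, assuming A4 = 440 Hz."""
    return 440.0 * 2 ** (semitones_from_a4 / 12)

middle_c = piano_key_frequency(-9)   # C4 sits 9 semitones below A4
bottom_a = piano_key_frequency(-48)  # A0 sits four octaves below A4

for name, f in [("middle C", middle_c), ("bottom A", bottom_a)]:
    print(f"{name}: {f:.1f} Hz -> {wavelength(SPEED_OF_SOUND, f):.2f} m")

# Going the other way for light: frequency = speed / wavelength.
light_500nm_hz = SPEED_OF_LIGHT / 500e-9
print(f"500 nm light: {light_500nm_hz:.2e} Hz")
```

Light and sound differ in speed by a factor of nearly a million, which is why a wave you can see is so much shorter than a wave you can hear.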

Now, we might hear a fly buzzing, but we couldn’t possibly use that sound to work out where it is, at least not accurately enough to catch it. Light is much better than that. But if you’re a bat in a dark cave, you don’t have light. Meanwhile, at the pitches most of our everyday sounds are made of, sound is no good for measuring any geometry with a resolution much finer than a block the size of a car. So if you’re a bat, what you do is make your own waves, send them out and check the echoes. For that, you want your waves to be as short as you can make them. So bats make pulses of sound with wavelengths of just a few millimetres (around 3mm at the high end, which means frequencies of more than 100 kHz, far above the roughly 20 kHz limit of human hearing), and then compare the echo with the outgoing pulse to work out distance and direction. Now 3mm is not as good as sunlight, but it’s short enough to bounce off an insect, and that’s all the bat needs.
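The bat’s side of the arithmetic is equally short. A sketch, again assuming sound travels at about 343 m/s in air: the first function shows why a 3mm wavelength means an ultrasonic call, and the other two turn an echo’s round-trip time into a range. The moth at 1.5 m is made up for illustration, and the sketch covers distance only; working out direction involves comparing the echo between the bat’s two ears, which is left out here.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)

def frequency_for_wavelength(wavelength_m: float, speed: float = SPEED_OF_SOUND) -> float:
    """Frequency needed to produce a given wavelength."""
    return speed / wavelength_m

def echo_delay(distance_m: float, speed: float = SPEED_OF_SOUND) -> float:
    """Round-trip time for a pulse to reach a target and come back."""
    return 2 * distance_m / speed

def distance_from_echo(delay_s: float, speed: float = SPEED_OF_SOUND) -> float:
    """Invert the round trip: the pulse covers the distance twice."""
    return speed * delay_s / 2

call_hz = frequency_for_wavelength(0.003)
print(f"3 mm wavelength -> {call_hz / 1000:.0f} kHz")

# A hypothetical moth 1.5 m away.
delay = echo_delay(1.5)
print(f"echo returns after {delay * 1000:.2f} ms")
print(f"estimated range: {distance_from_echo(delay):.2f} m")
```

The halving in `distance_from_echo` is the one step that is easy to forget: the bat hears the echo only after the sound has gone out and come back.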

So how do bats do all that maths? Of course they don’t, just as kittens don’t do acceleration calculations when they pounce on mice. It’s all stuff that is learned by the animal that needs it. The ‘less intelligent’ the animal, the more is hard-wired; the more intelligent it is (and for ‘more intelligent’ you can read ‘more helpless at birth’), the less is hard-wired and the more is learned.

Bats don’t bump into things unless they are chasing them, and they can fly a whole lot better than humans. So it’s pretty clear that a bat’s perception of the geometry of the world, in extremely fast real time, is as good for it as ours is for us.

And what of the next era of intelligent life on this planet? How does artificial intelligence learn to see? Well, the short answer is, it learns. When you tell the Captcha robot that a certain picture contains a traffic light, do you think you are passing a test to prove to a robot that you aren’t one? Not really. If this robot could already tell what was a traffic light and what wasn’t, then we could safely assume that the robot punter trying to get through the Captcha test could tell too. No, what we are doing when we take those tests is teaching the AI behind Captcha what traffic lights look like in the real world. That is exactly the kind of labelled data the self-driving car industry needs.

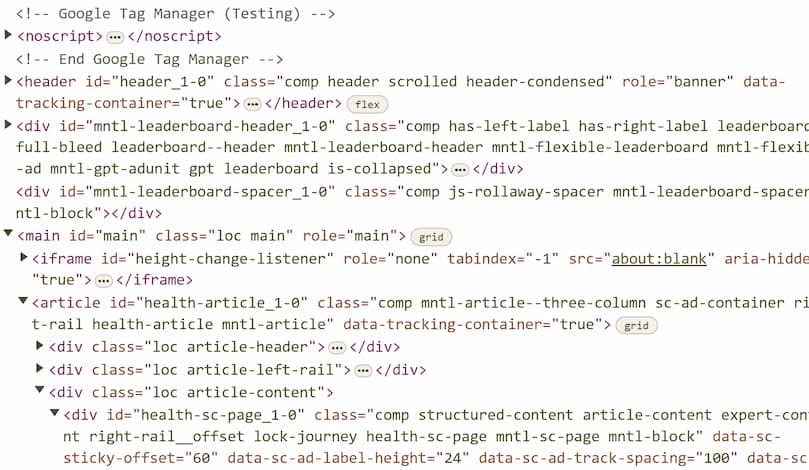

Have you ever seen what websites look like before your browser interprets them? They are files of text. These contain somewhere between twice and ten times as many characters of gobbledygook markup and stylesheet code as the visible text you see displayed on the screen. Markup is what the ‘M’ in ‘HTML’ stands for, and this code comprises interminable lists of short jumbles of letters and punctuation marks that tell the browser how to interpret and display the page on desktop and mobile screens.
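If you want to test that ratio on a page of your own, Python’s standard-library `html.parser` is enough for a rough count. This is only a sketch: the sample page is invented, ‘visible text’ here simply means text outside `<script>` and `<style>` elements (so text hidden by CSS, or the page title, would still be counted), and the ratio compares every other character in the file with the characters of that text.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collects text that is not inside <script> or <style> elements."""

    HIDDEN_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._hidden_depth = 0
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HIDDEN_TAGS:
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in self.HIDDEN_TAGS and self._hidden_depth > 0:
            self._hidden_depth -= 1

    def handle_data(self, data):
        if self._hidden_depth == 0:
            self._chunks.append(data)

    def visible_text(self) -> str:
        # Collapse runs of whitespace, roughly as a browser would.
        return " ".join("".join(self._chunks).split())

def markup_ratio(html: str) -> tuple[float, str]:
    """Return (non-visible characters per visible character, visible text)."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    text = parser.visible_text()
    return (len(html) - len(text)) / max(len(text), 1), text

sample = (
    '<html><head><style>p { color: #333; font-family: sans-serif; }</style></head>'
    '<body><p class="intro">Hello, <b>kittens</b>!</p></body></html>'
)
ratio, text = markup_ratio(sample)
print(text)
print(f"{ratio:.1f} characters of markup per visible character")
```

Even this tiny invented page carries several times more markup than text; feeding it a real saved page (read in with `open(...).read()`) gives a rough figure for that page.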

It also describes what will happen when you scroll, click, or whatever. If you look at a chunk of source code for a web page, it’s difficult to see what’s going on. So imagine that you ask your favourite AI chatbot to find all the information about whatever you want to know. It has to wade through all of this stuff to find the content for you. But how else could it do that? Just as you don’t have a little person in your head looking at a screen at the far end of your optic nerve, large language models don’t have their own mini-me looking at browser screens to navigate through web pages. Or do they?

Well, they’re starting to. Some multimodal AI models have been set up to find information both by using computer vision to read the image that the browser renders and by analysing the HTML source code directly. And we are getting to the stage where it can actually be quicker for the AI to look at the page image than to search through the code. I suppose that’s not so surprising when you think that web pages are made to be read by animals with eyes and hands rather than by whatever kind of animal likes reading digital source code, but I still find it a bit weird.

It’s just a question of how we, bats and bots each learn to perceive the environment in our respective caves, and what use we make of all that information. I have a feeling, though, that when we start giving intelligent computer models more control over what they are actually doing themselves, then, just like those kittens, they will really start learning what it means to live on this remarkable planet.